15 Discrete latent variables

This chapter covers mixture models and how to fit them. Because many deep generative models rely on variational inference, we also gradually build up to it.

15.1 K-means clustering

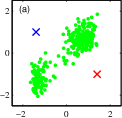

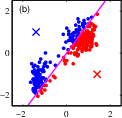

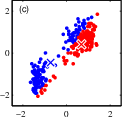

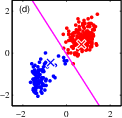

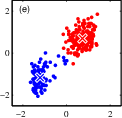

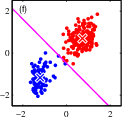

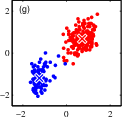

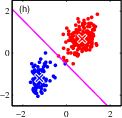

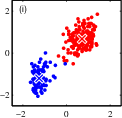

alternate between updating the assignments (E step) and the centers (M step) until convergence
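The alternation in the figure can be sketched in a few lines of NumPy. This is a minimal illustration, not a reference implementation: the function name `kmeans`, the initialisation from random data points, and the handling of empty clusters are assumptions made for the example.

```python
import numpy as np

def kmeans(X, K, n_iters=100, seed=0):
    """Plain K-means on an (N, D) array X; returns (centers, assignments, cost)."""
    rng = np.random.default_rng(seed)
    # Assumption: initialise the centers with K distinct data points.
    centers = X[rng.choice(len(X), size=K, replace=False)].astype(float)
    assign = None
    for _ in range(n_iters):
        # E step: assign each point to its nearest center.
        d2 = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)  # (N, K)
        new_assign = d2.argmin(axis=1)
        if assign is not None and np.array_equal(new_assign, assign):
            break  # assignments unchanged, so the centers would not move either
        assign = new_assign
        # M step: move each center to the mean of its assigned points.
        for k in range(K):
            members = X[assign == k]
            if len(members) > 0:  # an empty cluster keeps its old center
                centers[k] = members.mean(axis=0)
    # Sum of squared distances from each point to its assigned center.
    cost = ((X - centers[assign]) ** 2).sum()
    return centers, assign, cost

# Toy data: two well-separated blobs of 50 points each.
data_rng = np.random.default_rng(1)
X = np.vstack([data_rng.normal(-5, 0.5, (50, 2)), data_rng.normal(5, 0.5, (50, 2))])
centers, assign, cost = kmeans(X, K=2)
print(centers, cost)
```

The loop stops as soon as an E step leaves the assignments unchanged, which is one simple way to detect convergence; a tolerance on the change in cost would work as well.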

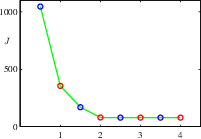

plot of the cost function after each E step (in blue) and M step (in red).
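The notes do not write out the cost being plotted. Assuming it is the standard K-means distortion measure, with binary indicators \(r_{nk} \in \{0, 1\}\) marking which cluster point \(\mathbf{x}_n\) is assigned to, it reads

\[ J = \sum_{n=1}^{N} \sum_{k=1}^{K} r_{nk} \, \|\mathbf{x}_n - \boldsymbol{\mu}_k\|^2 \]

The E step minimizes \(J\) over the \(r_{nk}\) with the centers fixed, and the M step minimizes \(J\) over the centers with the assignments fixed, which gives

\[ \boldsymbol{\mu}_k = \frac{\sum_{n} r_{nk} \mathbf{x}_n}{\sum_{n} r_{nk}} \]

Neither step can increase \(J\), which is why the plotted curve decreases monotonically.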

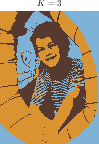

15.1.1 Image segmentation

applying K-means to image segmentation, for different values of K
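One way to reproduce this kind of experiment is to treat every pixel as a point in RGB space, cluster the pixels, and repaint each pixel with its cluster center. The sketch below uses a small synthetic image rather than a real photograph; `segment` and `nearest_center` are hypothetical helper names, and the fixed iteration count is an assumption chosen for brevity.

```python
import numpy as np

def nearest_center(pixels, centers):
    """Index of the closest center for each row of pixels."""
    d2 = ((pixels[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    return d2.argmin(axis=1)

def segment(image, K, n_iters=20, seed=0):
    """Replace every pixel of an (H, W, 3) image by its K-means center colour."""
    pixels = image.reshape(-1, 3).astype(float)
    rng = np.random.default_rng(seed)
    # Assumption: start from K randomly chosen pixels.
    centers = pixels[rng.choice(len(pixels), size=K, replace=False)].copy()
    for _ in range(n_iters):
        labels = nearest_center(pixels, centers)           # E step
        for k in range(K):                                 # M step
            if np.any(labels == k):
                centers[k] = pixels[labels == k].mean(axis=0)
    labels = nearest_center(pixels, centers)               # final assignment
    segmented = centers[labels].reshape(image.shape)
    return segmented, labels.reshape(image.shape[:2])

# Synthetic test image: a reddish left half and a bluish right half, plus noise.
img_rng = np.random.default_rng(0)
image = np.zeros((20, 30, 3))
image[:, :15] = [0.9, 0.1, 0.1]
image[:, 15:] = [0.1, 0.1, 0.9]
image += img_rng.normal(0, 0.02, image.shape)

for K in (2, 3):
    seg, labels = segment(image, K)
    n_colours = len(np.unique(seg.reshape(-1, 3), axis=0))
    print(f"K={K}: {n_colours} distinct colours in the segmented image")
```

The segmented image contains at most K distinct colours, so larger K keeps more detail of the original at the cost of a less compact description.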

15.2 Mixture of Gaussians

graphical-model representation of a mixture model (VERY SIMPLE!)
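Assuming the graph has the usual two nodes, a discrete latent variable z with an arrow to the observation x, it can be read as a sampling recipe: first draw the component index, then draw the point from that component's Gaussian. The sketch below illustrates this; `sample_mixture` and all parameter values are made up for the example.

```python
import numpy as np

def sample_mixture(pi, mus, Sigmas, N, seed=0):
    """Ancestral sampling: z ~ Categorical(pi), then x ~ N(mu_z, Sigma_z)."""
    rng = np.random.default_rng(seed)
    z = rng.choice(len(pi), size=N, p=pi)  # latent component index per point
    X = np.stack([rng.multivariate_normal(mus[k], Sigmas[k]) for k in z])
    return X, z

# Illustrative parameters for a two-component mixture in 2-D.
pi = np.array([0.3, 0.7])
mus = np.array([[-4.0, 0.0], [4.0, 0.0]])
Sigmas = np.array([np.eye(2), np.eye(2)])

X, z = sample_mixture(pi, mus, Sigmas, N=500)
print(X.shape, np.bincount(z))
```

In a real dataset only X is observed; z is the discrete latent variable that the rest of the chapter is about inferring.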

\[ \ln p(\mathbf{X}|\boldsymbol{\pi}, \boldsymbol{\mu}, \boldsymbol{\Sigma}) = \sum_{n=1}^{N} \ln \left\{ \sum_{k=1}^{K} \pi_k \mathcal{N}(\mathbf{x}_n|\boldsymbol{\mu}_k, \boldsymbol{\Sigma}_k) \right\} \]
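The log likelihood above can be evaluated directly, but the inner sum over components is prone to underflow, so a log-sum-exp is the usual trick. The following is a NumPy-only sketch; the function names and the Cholesky-based density are choices made for this example, not something the notes prescribe.

```python
import numpy as np

def gaussian_logpdf(X, mu, Sigma):
    """ln N(x | mu, Sigma) for each row of X, computed via a Cholesky factor."""
    D = X.shape[1]
    L = np.linalg.cholesky(Sigma)             # Sigma = L @ L.T
    z = np.linalg.solve(L, (X - mu).T)        # (D, N): L^{-1} (x - mu)
    maha = (z ** 2).sum(axis=0)               # squared Mahalanobis distance
    log_det = 2.0 * np.log(np.diag(L)).sum()  # ln |Sigma|
    return -0.5 * (D * np.log(2 * np.pi) + log_det + maha)

def gmm_log_likelihood(X, pi, mus, Sigmas):
    """Sum over n of ln sum_k pi_k N(x_n | mu_k, Sigma_k)."""
    # log_terms[n, k] = ln pi_k + ln N(x_n | mu_k, Sigma_k)
    log_terms = np.stack(
        [np.log(pi[k]) + gaussian_logpdf(X, mus[k], Sigmas[k]) for k in range(len(pi))],
        axis=1,
    )
    # Log-sum-exp over k, subtracting the row maximum for numerical stability.
    m = log_terms.max(axis=1, keepdims=True)
    log_px = m[:, 0] + np.log(np.exp(log_terms - m).sum(axis=1))
    return log_px.sum()

# Illustrative parameters and data, chosen only for this example.
pi = np.array([0.4, 0.6])
mus = np.array([[0.0, 0.0], [3.0, 3.0]])
Sigmas = np.array([np.eye(2), 2.0 * np.eye(2)])
X = np.array([[0.1, -0.2], [2.9, 3.3], [1.5, 1.5]])
print(gmm_log_likelihood(X, pi, mus, Sigmas))
```

Because the logarithm sits outside the sum over k, the expression does not decompose per component, which is what makes maximizing it harder than in the single-Gaussian case.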